How to Construct an Analytical Framework for a Systematic Review

Chapter 2. Developing the Topic and Structuring Systematic Reviews of Medical Tests: Utility of PICOTS, Analytic Frameworks, Decision Trees, and Other Frameworks

Methods Guide – Chapter Jun 1, 2012

This is a chapter from AHRQ's Methods Guide for Medical Test Reviews.

Abstract

Topic development and structuring a systematic review of diagnostic tests are complementary processes. The goals of a medical test review are to identify and synthesize evidence to evaluate the impacts of alternative testing strategies on health outcomes and to promote informed decisionmaking. A common challenge is that the request for a review may state the claim for the test ambiguously. Because medical tests affect clinical outcomes indirectly, reviewers need to identify which intermediate outcomes link a medical test to improved clinical outcomes. In this paper, we suggest five principles for addressing these challenges: the PICOTS typology (Patient population, Intervention, Comparator, Outcomes, Timing, Setting), analytic frameworks, simple decision trees, other organizing frameworks, and rules for when diagnostic accuracy is sufficient.

Introduction

"[We] have the ironic situation in which important and painstakingly developed knowledge often is applied haphazardly and anecdotally. Such a situation, which is not acceptable in the basic sciences or in drug therapy, also should not be acceptable in clinical applications of diagnostic technology."

J. Sanford (Sandy) Schwartz, Institute of Medicine, 1985.1

Developing the topic creates the foundation and structure of an effective systematic review. This process includes understanding and clarifying a claim about a test (as to how it might be of value in practice) and establishing the key questions to guide decisionmaking related to the claim. Doing so typically involves specifying the clinical context in which the test might be used. Clinical context includes patient characteristics, how a new test might fit into existing diagnostic pathways, technical details of the test, characteristics of clinicians or operators using the test, management options, and setting. Structuring the review refers to identifying the analytic strategy that will most directly accomplish the goals of the review, accounting for idiosyncrasies of the data.

Topic development and structuring of the review are complementary processes. As Evidence-based Practice Centers (EPCs) develop and refine the topic, the structure of the review should become clearer. Moreover, success at this phase reduces the risk of major changes in the scope of the review and minimizes rework.

While this chapter is intended to serve as a guide for EPCs, the processes described here are relevant to other systematic reviewers and a broad spectrum of stakeholders, including patients, clinicians, caretakers, researchers, funders of research, government, employers, health care payers, and industry, as well as the general public. This paper highlights challenges unique to systematic reviews of medical tests. For a general discussion of these issues as they exist in all systematic reviews, we refer the reader to previously published EPC methods papers.2,3

Common Challenges

The ultimate goal of a medical test review is to identify and synthesize evidence that will help evaluate the impacts on health outcomes of alternative testing strategies. Two common problems can impede the achievement of this goal. One is that the request for a review may state the claim for the test ambiguously. For example, a request concerning a new medical test for Alzheimer's disease may fail to specify the patients who may benefit from the test, so that the test's use ranges from a screening tool among the "worried well" without evidence of deficit to a diagnostic test in those with frank impairment and loss of function in daily living. The request for review may not specify the range of use to be considered. Similarly, the request for a review of tests for prostate cancer may fail to consider the role of such tests in clinical decisionmaking, such as guiding the decision to perform a biopsy.

Because of the indirect impact of medical tests on clinical outcomes, a second problem is how to identify which intermediate outcomes link a medical test to improved clinical outcomes, compared with an existing test. The scientific literature related to the claim rarely includes direct evidence, such as randomized controlled trial results, in which patients are allocated to the relevant test strategies and evaluated for downstream health outcomes. More commonly, evidence in support of the claim relates to intermediate outcomes such as test accuracy.

Principles for Addressing the Challenges

Principle 1: Engage stakeholders using the PICOTS typology.

In approaching topic development, reviewers should engage in a direct dialogue with the primary requestors and relevant users of the review (herein denoted "stakeholders") to understand the objectives of the review in practical terms; in particular, investigators should understand the sorts of decisions that the review is likely to affect. This process of engagement serves to bring investigators and stakeholders to a shared understanding about the essential details of the tests and their relationship to existing test strategies (i.e., whether as replacement, triage, or add-on), range of potential clinical utility, and potential adverse consequences of testing.

Operationally, the objective of the review is reflected in the key questions, which are usually presented in a preliminary form at the outset of a review. Reviewers should examine the proposed key questions to ensure that they accurately reflect the needs of stakeholders and are likely to be answered given the available time and resources. This is a process of trying to balance the importance of the topic against the feasibility of completing the review. Including a wide variety of stakeholders (such as the U.S. Food and Drug Administration [FDA], manufacturers, technical and clinical experts, and patients) can help provide additional perspectives on the claim and use of the tests. A preliminary examination of the literature can identify existing systematic reviews and clinical practice guidelines that may summarize evidence on current strategies for using the test and its potential benefits and harms.

The PICOTS typology (Patient population, Intervention, Comparator, Outcomes, Timing, Setting), defined in the Introduction to this Medical Test Methods Guide (Chapter 1), is a typology for defining particular contextual issues, and this formalism can be useful in focusing discussions with stakeholders. The PICOTS typology is a vital part of systematic reviews of both interventions and tests; furthermore, its transparent and explicit structure positively influences search methods, study selection, and data extraction.
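One way to make the PICOTS elements concrete during stakeholder discussions is to record them as a structured checklist. The sketch below is a minimal illustration that assumes a hypothetical `Picots` record (not part of any AHRQ tool). Its blank fields flag the contextual details that still need to be settled. The example draft echoes the ambiguous Alzheimer's disease request described under Common Challenges.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class Picots:
    """Hypothetical record of the six PICOTS elements for one key question."""
    population: Optional[str] = None
    intervention: Optional[str] = None  # index test or test-and-treat strategy
    comparator: Optional[str] = None
    outcomes: Optional[str] = None
    timing: Optional[str] = None
    setting: Optional[str] = None

    def unspecified(self) -> List[str]:
        """Names of elements still blank, in PICOTS order: items to raise with stakeholders."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# An ambiguous request: the test is named, but almost nothing else is.
draft = Picots(intervention="new test for Alzheimer's disease")
print(draft.unspecified())
```

As the topic is refined iteratively, filling in fields one at a time shows which parts of the clinical context remain open.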

It is important to recognize that the process of topic refinement is iterative and that PICOTS elements may change as the clinical context becomes clearer. Despite the best efforts of all participants, the topic may evolve even as the review is being conducted. Investigators should consider at the outset how such a situation will be addressed.4–6

Principle 2: Develop an analytic framework.

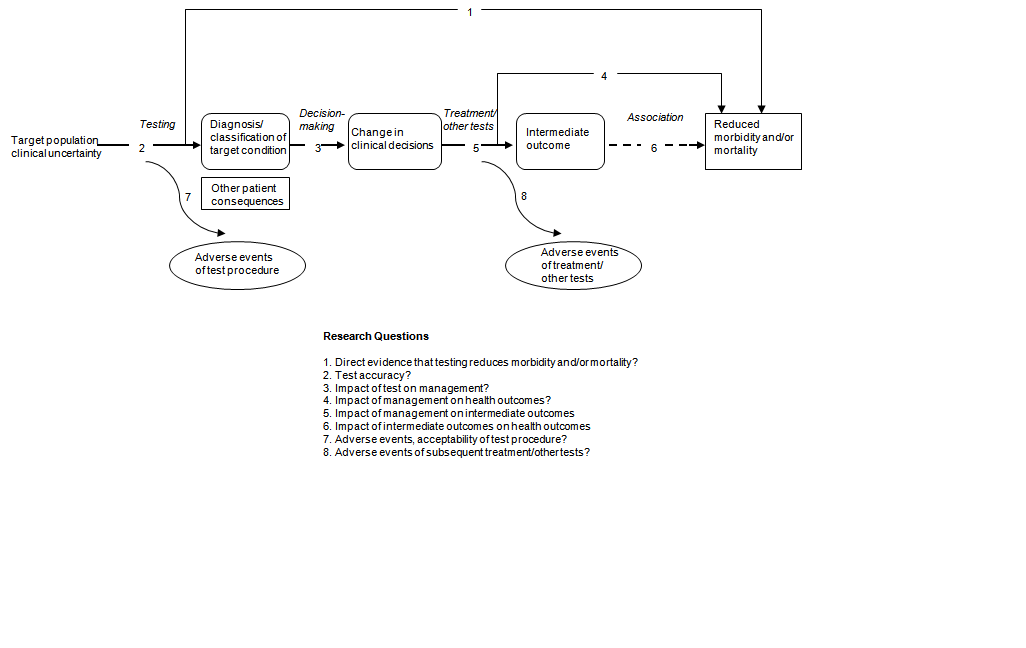

We use the term "analytic framework" (sometimes called a causal pathway) to denote a specific form of graphical representation that specifies a path from the intervention or test of interest to important health outcomes, through intervening steps and intermediate outcomes.7 Among PICOTS elements, the target patient population, intervention, and clinical outcomes are specifically shown. The intervention can actually be viewed as a test-and-treat strategy, as shown in links 2 through 5 of Figure 2–1. In the figure, the comparator is not shown explicitly but is implied. Each linkage relating test, intervention, or outcome represents a potential key question and, it is hoped, a coherent body of literature.
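Each linkage in an analytic framework is a candidate key question, so the framework can also be treated as a small directed graph. The sketch below uses a simplified, hypothetical set of nodes and does not reproduce Figure 2–1 exactly. Listing the edges enumerates the candidate key questions. Enumerating the paths separates a direct (overarching) link from the indirect chain that runs through intermediate outcomes.

```python
from typing import Dict, List, Optional

# Hypothetical, simplified framework: each node maps to the nodes it links to.
FRAMEWORK: Dict[str, List[str]] = {
    "target population": ["test result", "health outcomes"],  # direct link = overarching question
    "test result": ["management decision"],
    "management decision": ["intermediate outcome", "adverse effects of treatment"],
    "intermediate outcome": ["health outcomes"],
    "adverse effects of treatment": [],
    "health outcomes": [],
}


def key_questions(graph: Dict[str, List[str]]) -> List[str]:
    """Every linkage (edge) in the framework is a candidate key question."""
    return [f"{src} -> {dst}" for src, dsts in graph.items() for dst in dsts]


def paths(graph: Dict[str, List[str]], start: str, goal: str,
          prefix: Optional[List[str]] = None) -> List[List[str]]:
    """All paths from start to goal, found by depth-first search (graph assumed acyclic)."""
    prefix = (prefix or []) + [start]
    if start == goal:
        return [prefix]
    found: List[List[str]] = []
    for nxt in graph.get(start, []):
        found.extend(paths(graph, nxt, goal, prefix))
    return found


for question in key_questions(FRAMEWORK):
    print(question)
for path in paths(FRAMEWORK, "target population", "health outcomes"):
    print(" -> ".join(path))
```

When direct evidence for the overarching link is lacking, the longer path lists the linkages for which evidence would need to be assembled.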

The Agency for Healthcare Research and Quality (AHRQ) EPC program has described the development and use of analytic frameworks in systematic reviews of interventions. Since the impact of tests on clinical outcomes usually depends on downstream interventions, analytic frameworks for systematic reviews of tests are particularly valuable and should be routinely included. The analytic framework is developed iteratively in consultation with stakeholders to illustrate and define the important clinical decisional dilemmas and thus serves to clarify important key questions further.2

However, systematic reviews of medical tests present unique challenges not encountered in reviews of therapeutic interventions. The analytic framework can help users to understand how the often convoluted linkages between intermediate and clinical outcomes fit together, and to consider whether these downstream issues may be relevant to the review. Adding specific elements to the analytic framework will reflect the understanding gained about clinical context.

Harris and colleagues have described the value of the analytic framework in assessing screening tests for the U.S. Preventive Services Task Force (USPSTF).8 A prototypical analytic framework for medical tests as used by the USPSTF is shown in Figure 2–1. Each number in Figure 2–1 can be viewed as a separate key question that might be included in the evidence review.

Figure 2–1. Application of USPSTF analytic framework to test evaluation*

*Adapted from Harris et al., 2001.8

In summarizing evidence, studies for each linkage might vary in strength of design, limitations of conduct, and adequacy of reporting. The linkages leading from changes in patient management decisions to health outcomes are often of particular importance. The implication here is that the value of a test usually derives from its influence on some action taken in patient management. Although this is usually the case, sometimes the information from a test may have value on its own, independent of any action it may prompt. For instance, information about prognosis that does not necessarily trigger any actions may have a meaningful psychological impact on patients and caregivers.

Principle 3: Consider using decision trees.

An analytic framework is helpful when direct evidence is lacking, because it shows relevant key questions along indirect pathways between the test and important clinical outcomes. Analytic frameworks are, however, not well suited to depicting multiple alternative uses of the particular test (or its comparators). They are also limited in their ability to represent the impact of test results on clinical decisions and the specific potential outcome consequences of altered decisions. Reviewers can use simple decision trees or flow diagrams alongside the analytic framework to illustrate details of the potential impact of test results on management decisions and outcomes. Along with PICOTS specifications and analytic frameworks, these graphical tools represent systematic reviewers' understanding of the clinical context of the topic. Constructing decision trees may help to clarify key questions by identifying which indices of diagnostic accuracy and other statistics are relevant to the clinical problem, and which range of possible pathways and outcomes practically and logically flow from a test strategy (see Chapter 3, "Choosing the Important Outcomes for a Systematic Review of a Medical Test"). Lord et al. describe how diagrams resembling decision trees define which steps and outcomes may differ with different test strategies. These diagrams thus identify the important questions to ask when comparing tests, according to whether the new test is a replacement, a triage, or an add-on to the existing test strategy.9
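A decision tree can be evaluated by "rolling back": outcome values are averaged at chance nodes, and the best option is taken at a decision node. The sketch below is illustrative only. The node classes, the probabilities, and the outcome values (on a hypothetical 0 to 1 scale) are assumptions and are not taken from any review cited in this chapter. At the topic-development stage, the exercise is mainly useful for its structure: writing out the branches shows which accuracy indices feed each outcome. In this example, the relevant index is how often a negative triage result is wrong.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class Leaf:
    label: str
    value: float  # hypothetical outcome value on a 0-1 scale


@dataclass
class Chance:
    label: str
    branches: List[Tuple[float, "Node"]]  # (probability, subtree); probabilities sum to 1


@dataclass
class Decision:
    label: str
    options: List["Node"]


Node = Union[Leaf, Chance, Decision]


def rollback(node: Node) -> float:
    """Expected value at chance nodes; best (maximum) option at decision nodes."""
    if isinstance(node, Leaf):
        return node.value
    if isinstance(node, Chance):
        return sum(p * rollback(child) for p, child in node.branches)
    return max(rollback(option) for option in node.options)


# Hypothetical triage question with a 30% probability of disease.
biopsy_all = Chance("biopsy everyone", [
    (0.3, Leaf("disease found at biopsy, treated", 0.80)),
    (0.7, Leaf("benign biopsy, biopsy harms only", 0.97)),
])
triage_first = Chance("triage test first", [
    (0.3, Chance("disease present", [
        (0.9, Leaf("test positive, biopsy, treated", 0.80)),
        (0.1, Leaf("test negative, treatment delayed", 0.60)),
    ])),
    (0.7, Chance("disease absent", [
        (0.8, Leaf("test negative, biopsy avoided", 1.00)),
        (0.2, Leaf("test positive, benign biopsy", 0.97)),
    ])),
])
choice = Decision("strategy", [biopsy_all, triage_first])

print(round(rollback(biopsy_all), 4), round(rollback(triage_first), 4))
```

The numbers produced here carry no clinical meaning. What the tree makes visible is that the comparison depends on the "test negative, treatment delayed" branch, that is, on the false-negative rate among patients with disease.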

One example of the utility of decision trees comes from a review of noninvasive tests for carotid artery disease.10 In this review, investigators found that common metrics of sensitivity and specificity that counted both high-grade stenosis and complete occlusion as "positive" studies would not be reliable guides to actual test performance, because the two results would be treated quite differently. This insight was subsequently incorporated into calculations of noninvasive carotid test performance.10–11 Additional examples are provided in the Illustrations below. For further discussion on when to consider using decision trees, see Chapter 10 in this Medical Test Methods Guide, "Deciding Whether To Complement a Systematic Review of Medical Tests With Decision Modeling."

Principle 4: Sometimes it is sufficient to focus exclusively on accuracy studies.

Once reviewers have diagrammed the decision tree showing how diagnostic accuracy may affect intermediate and clinical outcomes, they can determine whether key questions about outcomes beyond diagnostic accuracy need to be included. For instance, diagnostic accuracy may be sufficient when the new test is as sensitive and as specific as the old test and also has advantages over it, such as causing fewer adverse effects, being less invasive, being easier to use, providing results more quickly, or costing less. Implicit in this instance is the comparability of downstream management decisions and outcomes between the test under evaluation and the comparator test. A review may also be limited to evaluation of sensitivity and specificity when the new test is as sensitive as, but more specific than, the comparator, allowing avoidance of the harms of further tests or unnecessary treatment. This situation requires two assumptions: that the same cases would be detected by both tests, and that treatment efficacy would be unaffected by which test was used.12
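The second case (equal sensitivity, higher specificity) can be made concrete with expected 2×2 counts. The sketch below uses hypothetical accuracy values and a hypothetical 10% prevalence. It relies on the assumption stated in the text that the two tests detect the same cases, so the only difference between them lies in false positives and, consequently, in avoidable further tests or treatment.

```python
def two_by_two(sensitivity: float, specificity: float, prevalence: float, n: int = 1000) -> dict:
    """Expected true/false positive/negative counts among n tested patients (rounded to 0.1)."""
    diseased = n * prevalence
    healthy = n - diseased
    counts = {
        "TP": sensitivity * diseased,
        "FN": (1 - sensitivity) * diseased,
        "FP": (1 - specificity) * healthy,
        "TN": specificity * healthy,
    }
    return {cell: round(value, 1) for cell, value in counts.items()}


# Hypothetical tests: equally sensitive, the new one more specific.
old_test = two_by_two(sensitivity=0.90, specificity=0.80, prevalence=0.10)
new_test = two_by_two(sensitivity=0.90, specificity=0.90, prevalence=0.10)
avoided_false_positives = old_test["FP"] - new_test["FP"]
print(old_test, new_test, avoided_false_positives)
```

Per 1,000 patients tested, the more specific test in this example avoids about 90 false-positive results and detects the same number of cases. Whether this translates into net benefit still depends on the second assumption, namely that treatment efficacy does not depend on which test identified the patient.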

Particular questions to consider when reviewing analytic frameworks and decision trees to determine whether diagnostic accuracy studies alone are adequate include:

- Are the extra cases detected by the new, more sensitive test as responsive to treatment as those identified by the older test?

- Are trials available that selected patients using the new test?

- Do trials assess whether the new test results predict response?

- If available trials selected only patients assessed with the old test, do extra cases identified with the new test represent the same spectrum or disease subtypes as trial participants?

- Are the cases detected by each test subsequently confirmed by the same reference standard?

- Does the new test change the definition or spectrum of disease (e.g., by finding disease at an earlier stage)?

- Is there heterogeneity of test accuracy and treatment effect (i.e., do accuracy and treatment effects vary sufficiently according to levels of a patient characteristic to change the comparison of the old and new test)?

When the clinical utility of an older comparator test has been established and the first five questions can all be answered in the affirmative, diagnostic accuracy evidence alone may be sufficient to support conclusions about a new test.

Principle 5: Other frameworks may be helpful.

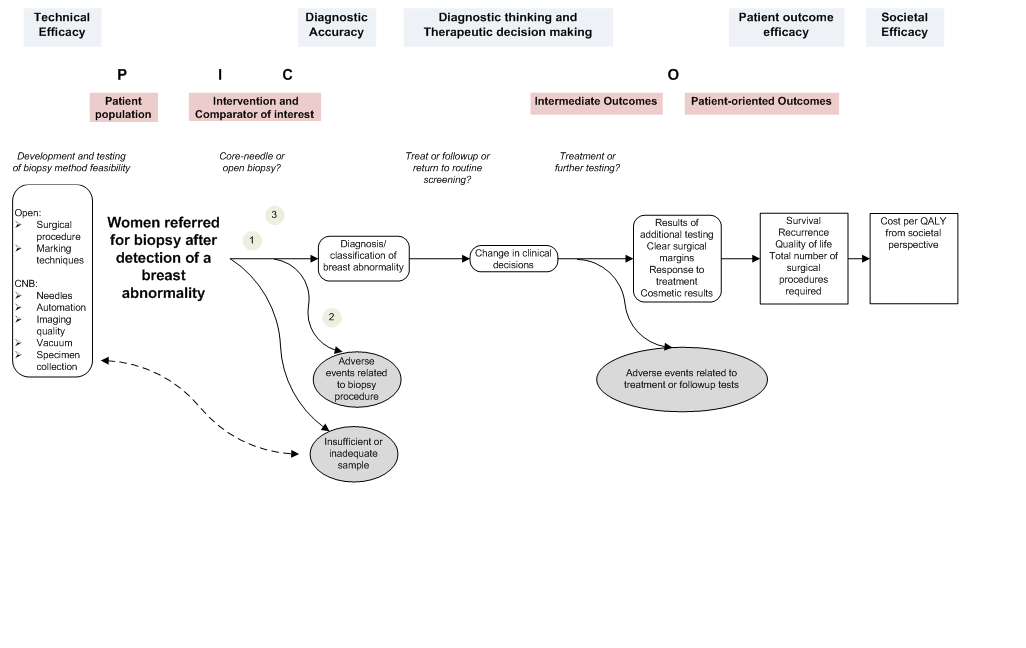

Various other frameworks (generally termed "organizing frameworks," as described briefly in the Introduction to this Medical Test Methods Guide [Chapter 1]) relate to categorical features of medical tests and medical test studies. Lijmer and colleagues reviewed the different types of organizational frameworks and found 19 frameworks. These generally classify medical test research into six different domains or phases: technical efficacy, diagnostic accuracy, diagnostic thinking efficacy, therapeutic efficacy, patient outcome, and societal aspects.13

These frameworks serve a variety of purposes. Some researchers, such as Van den Bruel and colleagues, consider frameworks as a hierarchy and a model for how medical tests should be studied, with one level leading to the next (i.e., success at each level depends on success at the preceding level).14 Others, such as Lijmer and colleagues, have argued that "The evaluation frameworks can be useful to distinguish between study types, but they cannot be seen as a necessary sequence of evaluations. The evaluation of tests is most likely not a linear but a cyclic and repetitive process."13

We propose that, rather than serving as a hierarchy of evidence, organizational frameworks should be used to categorize key questions and to suggest which types of studies would be most useful for the review. They may guide the clustering of studies, which may improve the readability of a review document. No specific framework is recommended. Indeed, the categories of most organizational frameworks at least approximately line up with the analytic framework and the PICO(TS) elements, as shown in Figure 2–2.

Figure 2–2. Example of an analytical framework within an overarching conceptual framework in the evaluation of breast biopsy techniques*

*The numbers in the figure indicate where the three key questions are located within the flow of the analytical framework.

Illustrations

To illustrate the principles above, we describe three examples. In each case, the initial claim was at least somewhat ambiguous. Through the use of the PICOTS typology, the analytic framework, and simple decision trees, the systematic reviewers worked with stakeholders to clarify the objectives and analytic approach (Table 2–1). In addition to the examples described here, the AHRQ Effective Health Care Program Web site (https://effectivehealthcare.ahrq.gov) offers free access to ongoing and completed reviews containing specific applications of the PICOTS typology and analytic frameworks.

| | Full-Field Digital Mammography | HER2 | PET |
|---|---|---|---|
| General topic | FFDM to replace SFM in breast cancer screening (Figure 2–3). | HER2 gene amplification assay as add-on to HER2 protein expression assay (Figure 2–4). | PET as triage for breast biopsy (Figure 2–5). |
| Initial ambiguous claim | FFDM may be a useful alternative to SFM in screening for breast cancer. | HER2 gene amplification and protein expression assays may complement each other as means of selecting patients for targeted therapy. | PET may play an adjunctive role to breast examination and mammography in detecting breast cancer and selecting patients for biopsy. |
| Key concerns suggested by PICOTS, analytic framework, and decision tree | Key statistics: sensitivity, diagnostic yield, recall rate; similar types of management decisions and outcomes for index and comparator test-and-treat strategies. | Key statistics: proportion of individuals with intermediate/equivocal HER2 protein expression results who have HER2 gene amplification; key outcomes are related to effectiveness of HER2-targeted therapy in this subgroup. | Key statistics: negative predictive value; key outcomes to be contrasted were benefits of avoiding biopsy versus harms of delaying initiation of treatment for undetected tumors. |
| Refined claim | In screening for breast cancer, interpretation of FFDM and SFM would be similar, leading to similar management decisions and outcomes; FFDM may have a similar recall rate and a diagnostic yield at least as high as SFM; FFDM images may be more expensive, but easier to manipulate and store. | Among individuals with localized breast cancer, some may have equivocal results for HER2 protein overexpression but have positive HER2 gene amplification, identifying them as patients who may benefit from HER2-targeted therapy but otherwise would have been missed. | Among patients with a palpable breast mass or suspicious mammogram, if FDG PET is performed before biopsy, those with negative scans may avoid the adverse events of biopsy with potentially negligible risk of delayed treatment for undetected tumor. |
| Reference | Blue Cross and Blue Shield Association Technology Evaluation Center, 2002.15 | Seidenfeld et al., 2008.16 | Samson et al., 2002.17 |

FDG = fluorodeoxyglucose; FFDM = full-field digital mammography; HER2 = human epidermal growth factor receptor 2; PET = positron emission tomography; SFM = screen-film mammography

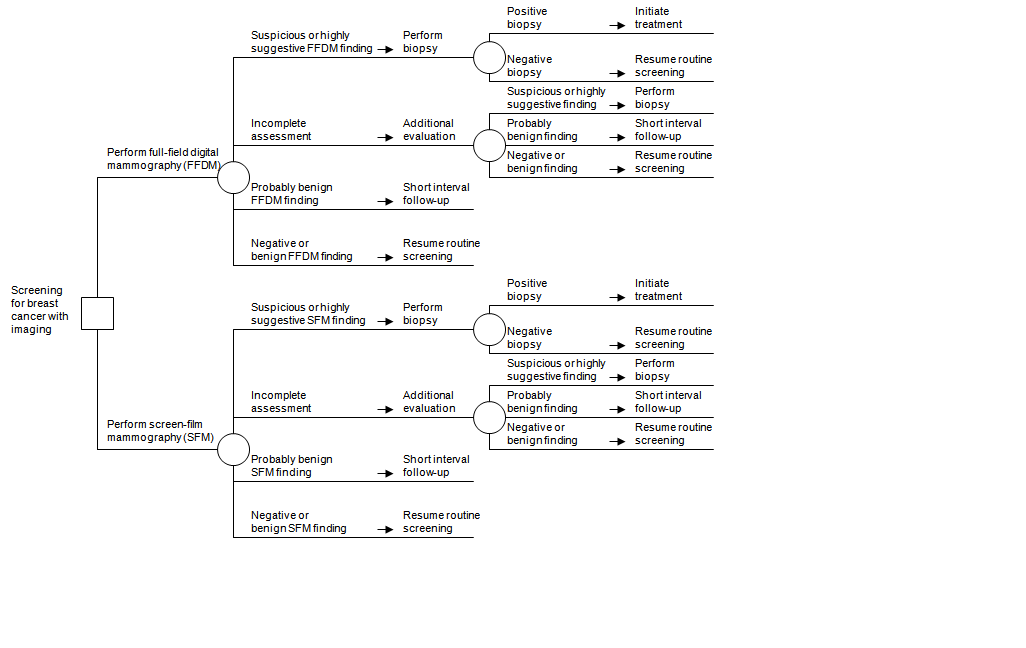

The first example concerns full-field digital mammography (FFDM) as a replacement for screen-film mammography (SFM) in screening for breast cancer; the review was conducted by the Blue Cross and Blue Shield Association Technology Evaluation Center.15 Specifying PICOTS elements and constructing an analytic framework were straightforward, with the latter resembling Figure 2–2 in form. In addition, with stakeholder input, a simple decision tree was drawn (Figure 2–3). It revealed that the management decisions for both screening strategies were similar, and that downstream treatment outcomes were therefore not a critical issue. The decision tree also showed that the key indices of test performance were sensitivity, diagnostic yield, and recall rate. These insights were useful as the project moved to abstracting and synthesizing the evidence, which focused on accuracy and recall rates. In this example, the reviewers concluded that FFDM and SFM had comparable accuracy and led to comparable outcomes, but that storing and manipulating images was much easier for FFDM than for SFM.

Figure 2–3. Replacement test example: full-field digital mammography versus screen-film mammography*

*Figure taken from Blue Cross and Blue Shield Association Technology Evaluation Center, 2002.15
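The three indices identified by the FFDM decision tree can be computed from screening counts. The sketch below uses hypothetical counts, not data from the Technology Evaluation Center review. It estimates sensitivity by treating cancers diagnosed during the follow-up interval after a negative screen as false negatives; this estimation approach is an assumption of the sketch. Diagnostic yield is expressed as cancers detected per 1,000 women screened, and recall rate as the proportion of screens recalled for further workup.

```python
def screening_metrics(screened: int, recalled: int,
                      screen_detected: int, interval_cancers: int) -> dict:
    """Recall rate, diagnostic yield (per 1,000 screened), and sensitivity for one strategy."""
    return {
        "recall_rate": recalled / screened,
        "yield_per_1000": 1000 * screen_detected / screened,
        # Assumption: interval cancers after a negative screen count as false negatives.
        "sensitivity": screen_detected / (screen_detected + interval_cancers),
    }


# Hypothetical counts for one screening arm.
arm = screening_metrics(screened=10_000, recalled=800, screen_detected=40, interval_cancers=10)
print(arm)
```

Computing the same three numbers for an FFDM arm and an SFM arm yields the comparison the refined claim calls for. Because the decision tree showed similar management decisions for both strategies, the comparison can stop at these indices.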

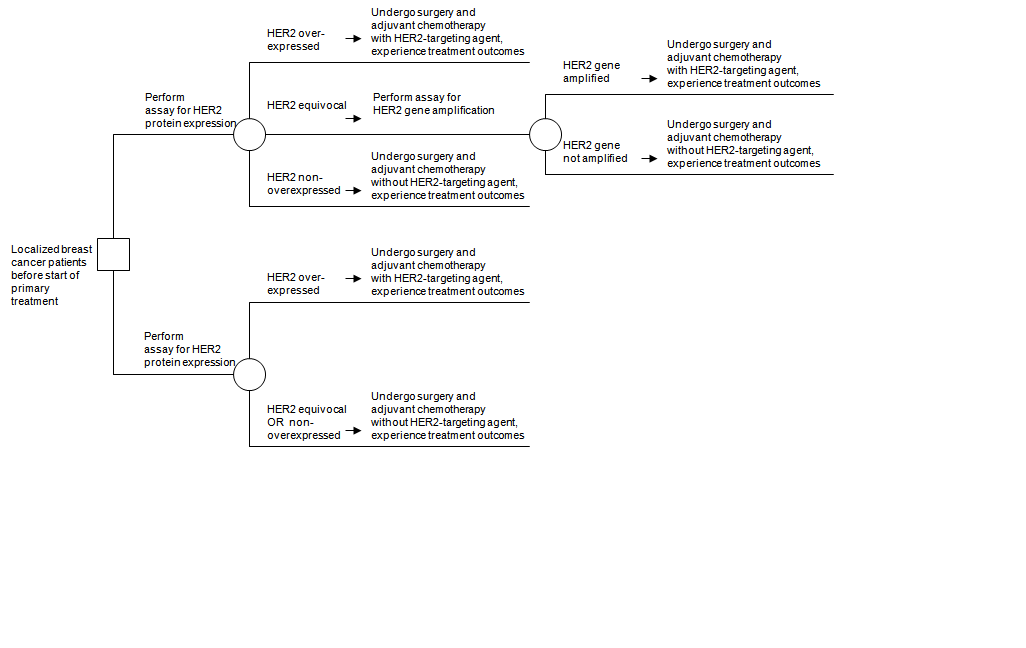

The second example concerns use of the human epidermal growth factor receptor 2 (HER2) gene amplification assay after the HER2 protein expression assay to select patients for HER2-targeting agents as part of adjuvant therapy among patients with localized breast cancer.16 The HER2 gene amplification assay has been promoted as an add-on to the HER2 protein expression assay. Specifically, individuals with equivocal HER2 protein expression would be tested for amplified HER2 gene levels. Patients with elevated levels by amplification assay would then receive adjuvant chemotherapy that includes HER2-targeting agents, in addition to those with increased HER2 protein expression. Again, PICOTS and an analytic framework were developed, establishing the basic key questions. In addition, the authors constructed a decision tree (Figure 2–4) that made it clear that the treatment outcomes affected by HER2 protein and gene assays were at least as important as the test accuracy. In the first example the reference standard was actual diagnosis by biopsy; here, the reference standard is the amplification assay itself. The decision tree identified the key accuracy index as the proportion of individuals with equivocal HER2 protein expression results who have positive amplified HER2 gene assay results. The tree also indicated that one key question must be whether HER2-targeted therapy is effective for patients who had equivocal results on the protein assay but were subsequently found to have positive amplified HER2 gene assay results.

Figure 2–4. Add-on test example: HER2 protein expression assay followed by HER2 gene amplification assay to select patients for HER2-targeted therapy*

HER2 = human epidermal growth factor receptor 2

*Figure taken from Seidenfeld et al., 2008.16
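The key accuracy index in the HER2 example is a simple proportion: equivocal protein-assay results that turn out to be amplification-positive. The sketch below estimates that proportion from hypothetical counts. It attaches a Wilson score interval, an interval method chosen here for illustration; it is not necessarily the method used by Seidenfeld et al.

```python
import math


def proportion_with_wilson_ci(successes: int, n: int, z: float = 1.96):
    """Point estimate and Wilson score interval (approximately 95% when z = 1.96)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return p, center - half, center + half


# Hypothetical counts: 30 of 120 equivocal protein-assay results show gene amplification.
p, lower, upper = proportion_with_wilson_ci(30, 120)
print(f"{p:.2f} (95% CI {lower:.3f} to {upper:.3f})")
```

This subgroup can be small, so an interval helps show how precisely the key index is estimated. The index alone does not answer the companion key question of whether HER2-targeted therapy is effective in this subgroup.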

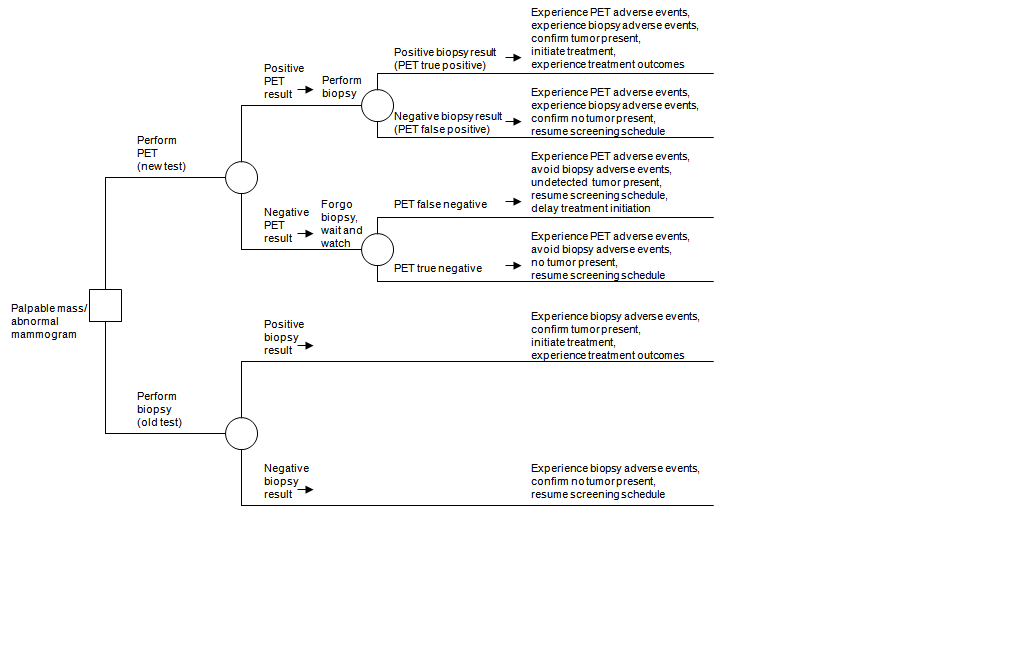

The third example concerns use of fluorodeoxyglucose positron emission tomography (FDG PET) as a guide to the decision to perform a breast biopsy on a patient with either a palpable mass or an abnormal mammogram.17 Only patients with a positive PET scan would be referred for biopsy. Table 2–1 shows the initial ambiguous claim, which lacked PICOTS specifications such as the mode in which testing would be done. The analytic framework was of limited value, as several possible relevant testing strategies were not represented explicitly in the framework. The authors therefore constructed a decision tree (Figure 2–5). The testing strategy in the lower portion of the decision tree entails performing biopsy in all patients. The triage strategy instead uses a positive PET finding to rule in a biopsy and a negative PET finding to rule out a biopsy. The decision tree illustrates that the key accuracy index is negative predictive value: the proportion of negative PET results that are truly negative. The tree also reveals the key contrast in outcomes: any harms of delaying treatment for undetected cancer when PET is falsely negative, versus the benefits of safely avoiding the adverse effects of biopsy when PET is truly negative. Estimates of negative predictive value suggested an unfavorable tradeoff between avoiding the adverse effects of biopsy and delaying treatment of an undetected cancer. The authors concluded that there is no net beneficial impact on outcomes when PET is used as a triage test to select patients for biopsy among those with a palpable breast mass or suspicious mammogram.

Figure 2–5. Triage test example: positron emission tomography (PET) to decide whether to perform breast biopsy among patients with a palpable mass or abnormal mammogram*

PET = positron emission tomography

*Figure taken from Samson et al., 2002.17
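The PET decision tree turns on negative predictive value (NPV). The sketch below computes NPV in two ways: directly from counts, and from sensitivity, specificity, and prevalence using Bayes' theorem. All inputs are hypothetical and are not estimates from Samson et al. Expressing 1 - NPV as missed cancers per 1,000 negative scans can make the tradeoff described above (avoided biopsies versus delayed treatment) easier to discuss with stakeholders.

```python
def npv_from_counts(true_negatives: float, false_negatives: float) -> float:
    """Proportion of negative results that are truly negative."""
    return true_negatives / (true_negatives + false_negatives)


def npv_from_accuracy(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Bayes' theorem: NPV = spec*(1 - prev) / (spec*(1 - prev) + (1 - sens)*prev)."""
    tn = specificity * (1 - prevalence)
    fn = (1 - sensitivity) * prevalence
    return tn / (tn + fn)


# Hypothetical inputs for illustration only.
npv = npv_from_accuracy(sensitivity=0.90, specificity=0.80, prevalence=0.30)
missed_per_1000_negatives = 1000 * (1 - npv)
print(f"NPV = {npv:.3f}; about {missed_per_1000_negatives:.0f} cancers per 1,000 negative scans")
```

With sensitivity and specificity held fixed, NPV rises as prevalence falls. The same test can therefore look much safer as a triage tool in a lower-risk population, which is one reason the PICOTS population must be pinned down before this index is interpreted.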

This example illustrates when a more formal decision analysis may be useful, specifically when a new test has higher sensitivity but lower specificity than the old test, or vice versa. Such a situation entails tradeoffs in the relative frequencies of true positives, false negatives, false positives, and true negatives, which decision analysis may help to quantify.
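In its simplest form, the tradeoff described here can be quantified by weighting each of the four result cells by a value for its downstream outcome. The sketch below is a toy expected-value calculation, not a full decision model. The accuracy values, the 20% prevalence, and the outcome values are all hypothetical.

```python
def expected_value(sensitivity: float, specificity: float, prevalence: float,
                   utilities: dict) -> float:
    """Probability-weighted average of outcome values over the four test-result cells."""
    probabilities = {
        "TP": sensitivity * prevalence,
        "FN": (1 - sensitivity) * prevalence,
        "FP": (1 - specificity) * (1 - prevalence),
        "TN": specificity * (1 - prevalence),
    }
    return sum(probabilities[cell] * utilities[cell] for cell in probabilities)


# Hypothetical outcome values on a 0-1 scale for each result cell.
UTILITIES = {"TP": 0.90, "FN": 0.40, "FP": 0.95, "TN": 1.00}

more_sensitive = expected_value(0.95, 0.70, 0.20, UTILITIES)
more_specific = expected_value(0.85, 0.90, 0.20, UTILITIES)
print(round(more_sensitive, 3), round(more_specific, 3))
```

In this toy example the more sensitive test comes out marginally ahead. Setting the false-negative value to 0.5 instead makes the two strategies tie. This dependence on a single input is why formal decision analysis usually varies such inputs (sensitivity analysis) rather than relying on one set of values.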

Summary

The immediate goal of a systematic review of a medical test is to determine the health impacts of use of the test in a particular context or set of contexts relative to one or more alternative strategies. The ultimate goal is to produce a review that promotes informed decisionmaking.

Key points are:

- Reaching the above-stated goals requires an interactive and iterative process of topic development and refinement aimed at understanding and clarifying the claim for a test. This work should be done in conjunction with the principal users of the review, experts, and other stakeholders.

- The PICOTS typology, analytic frameworks, simple decision trees, and other organizing frameworks are all tools that can minimize ambiguity, help identify where review resources should be focused, and guide the presentation of results.

- Sometimes it is sufficient to focus only on accuracy studies. For example, diagnostic accuracy may be sufficient when the new test is as sensitive and specific as the old test and also has advantages over it, such as having fewer adverse effects, being less invasive, being easier to use, providing results more quickly, or costing less.

References

- Institute of Medicine, Division of Health Sciences Policy, Division of Health Promotion and Disease Prevention, Committee for Evaluating Medical Technologies in Clinical Use. Assessing medical technologies. Washington, DC: National Academy Press; 1985. Chapter 3: Methods of technology assessment. pp. 80-90.

- Helfand M, Balshem H. AHRQ Series Paper 2: Principles for developing guidance: AHRQ and the effective health-care program. J Clin Epidemiol. 2010;63(5):484-90.

- Whitlock EP, Lopez SA, Chang S, et al. AHRQ Series Paper 3: Identifying, selecting, and refining topics for comparative effectiveness systematic reviews: AHRQ and the Effective Health-Care program. J Clin Epidemiol. 2010;63(5):491-501.

- Matchar DB, Patwardhan M, Sarria-Santamera A, et al. Developing a Methodology for Establishing a Statement of Work for a Policy-Relevant Technical Analysis. Technical Review 11. (Prepared by the Duke Evidence-based Practice Center under Contract No. 290-02-0025.) AHRQ Publication No. 06-0026. Rockville, MD: Agency for Healthcare Research and Quality; January 2006.

- Sarria-Santamera A, Matchar DB, Westermann-Clark EV, et al. Evidence-based practice center network and health technology assessment in the United States: bridging the cultural gap. Int J Technol Assess Health Care. 2006;22(1):33-8.

- Patwardhan MB, Sarria-Santamera A, Matchar DB, et al. Improving the process of developing technical reports for health care decision makers: using the theory of constraints in the evidence-based practice centers. Int J Technol Assess Health Care. 2006;22(1):26-32.

- Woolf SH. An organized analytic framework for practice guideline development: using the analytic logic as a guide for reviewing evidence, developing recommendations, and explaining the rationale. In: McCormick KA, Moore SR, Siegel RA, editors. Methodology perspectives: clinical practice guideline development. Rockville, MD: U.S. Department of Health and Human Services, Public Health Service, Agency for Health Care Policy and Research; 1994. p. 105-13.

- Harris RP, Helfand M, Woolf SH, et al. Current methods of the US Preventive Services Task Force: a review of the process. Am J Prev Med. 2001;20(3 Suppl):21-35.

- Lord SJ, Irwig L, Bossuyt PM. Using the principles of randomized controlled trial design to guide test evaluation. Med Decis Making. 2009;29(5):E1-E12. Epub 2009 Sep 22.

- Feussner JR, Matchar DB. When and how to study the carotid arteries. Ann Intern Med. 1988;109(10):805-18.

- Blakeley DD, Oddone EZ, Hasselblad V, et al. Noninvasive carotid artery testing. A meta-analytic review. Ann Intern Med. 1995;122(5):360-7.

- Lord SJ, Irwig L, Simes J. When is measuring sensitivity and specificity sufficient to evaluate a diagnostic test, and when do we need a randomized trial? Ann Intern Med. 2006;144(11):850-5.

- Lijmer JG, Leeflang M, Bossuyt PM. Proposals for a phased evaluation of medical tests. Med Decis Making. 2009;29(5):E13-21.

- Van den Bruel A, Cleemput I, Aertgeerts B, et al. The evaluation of diagnostic tests: evidence on technical and diagnostic accuracy, impact on patient outcome and cost-effectiveness is needed. J Clin Epidemiol. 2007;60(11):1116-22.

- Blue Cross and Blue Shield Association Technology Evaluation Center (BCBSA TEC). Full-field digital mammography. Volume 17, Number 7, July 2002.

- Seidenfeld J, Samson DJ, Rothenberg BM, et al. HER2 Testing to Manage Patients With Breast Cancer or Other Solid Tumors. Evidence Report/Technology Assessment No. 172. (Prepared by Blue Cross and Blue Shield Association Technology Evaluation Center Evidence-based Practice Center, under Contract No. 290-02-0026.) AHRQ Publication No. 09-E001. Rockville, MD: Agency for Healthcare Research and Quality; November 2008. https://www.ahrq.gov/downloads/pub/evidence/pdf/her2/her2.pdf. Accessed January 10, 2012.

- Samson DJ, Flamm CR, Pisano ED, et al. Should FDG PET be used to decide whether a patient with an abnormal mammogram or breast finding at physical examination should undergo biopsy? Acad Radiol. 2002;9(7):773-83.

Acknowledgements

Acknowledgements: We wish to thank David Matchar and Stephanie Chang for their valuable contributions.

Funding: Funded by the Agency for Healthcare Research and Quality (AHRQ) under the Effective Health Care Program.

Disclaimer: The findings and conclusions expressed here are those of the authors and do not necessarily represent the views of AHRQ. Therefore, no statement should be construed as an official position of AHRQ or of the U.S. Department of Health and Human Services.

Public domain notice: This document is in the public domain and may be used and reprinted without permission except those copyrighted materials that are clearly noted in the document. Further reproduction of those copyrighted materials is prohibited without the specific permission of copyright holders.

Accessibility: Persons using assistive technology may not be able to fully access information in this report. For assistance contact EPC@ahrq.hhs.gov.

Conflicts of interest: None of the authors has any affiliations or involvement that conflict with the information in this chapter.

Corresponding author: David Samson, M.S., Manager, Comparative Effectiveness Research, Technology Evaluation Center, Blue Cross and Blue Shield Association, 1310 G Street NW., Washington, DC 20005. Phone: 202–626–4835. Fax 845–462–4786. Email: david.samson@bcbsa.com.

Suggested citation: Samson D, Schoelles KM. Developing the topic and structuring systematic reviews of medical tests: utility of PICOTS, analytic frameworks, decision trees, and other frameworks. AHRQ Publication No. 12-EHC073-EF. Chapter 2 of Methods Guide for Medical Test Reviews (AHRQ Publication No. 12-EHC017). Rockville, MD: Agency for Healthcare Research and Quality; June 2012. Also published in a special supplement to the Journal of General Internal Medicine, July 2012.

Source: https://effectivehealthcare.ahrq.gov/products/methods-guidance-tests-topics/methods
